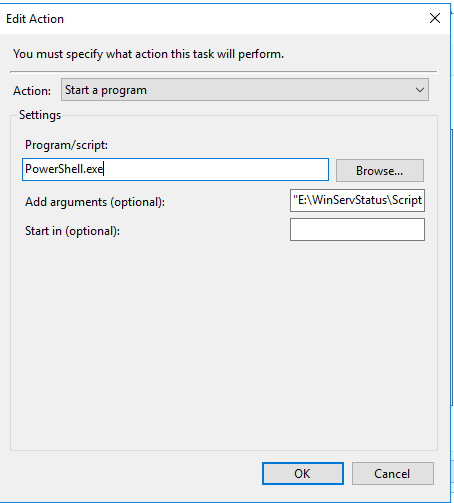

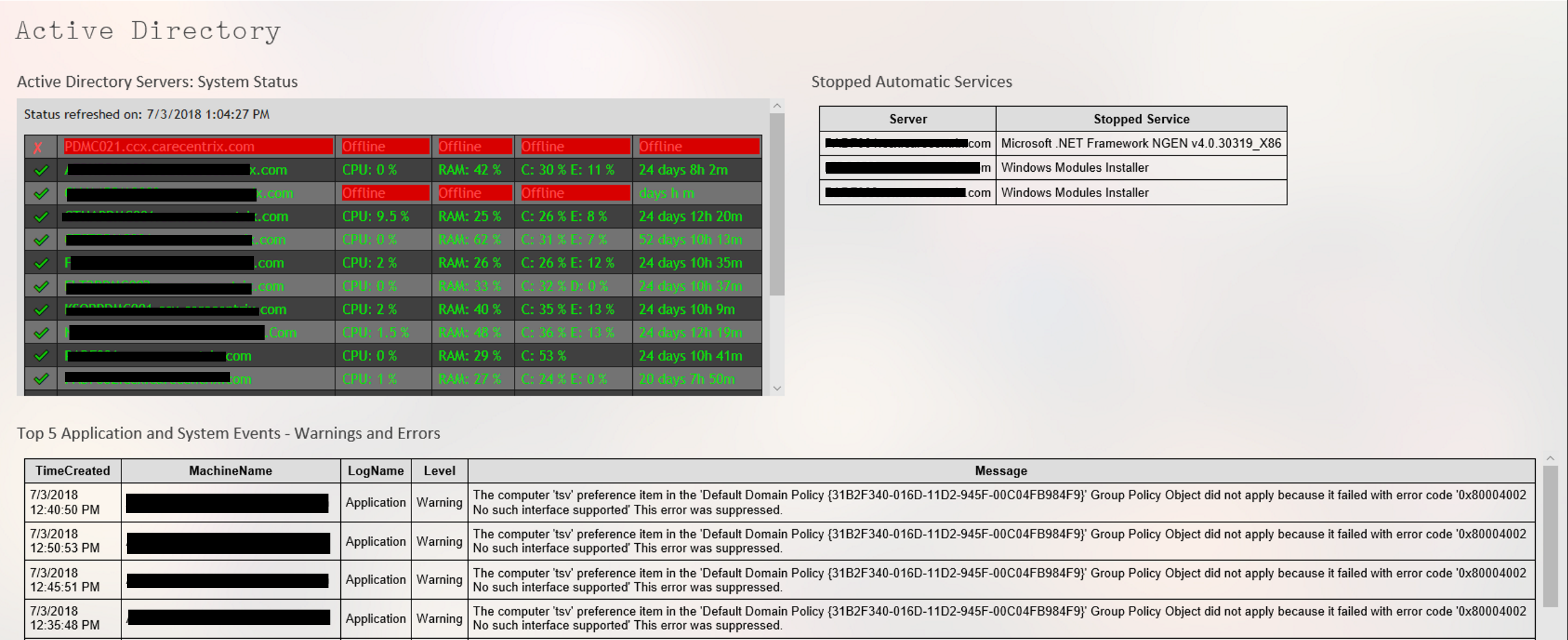

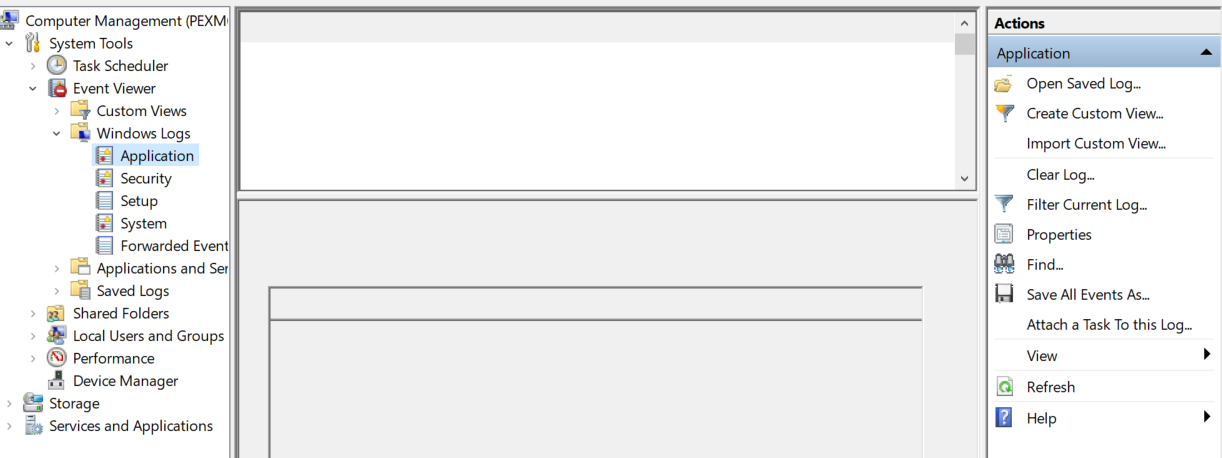

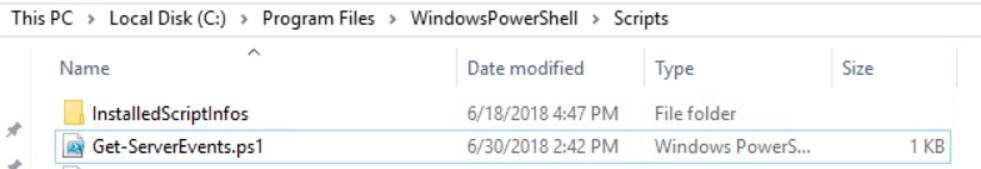

I’ve been caught out more than once by an automatic service that failed to start after a reboot, usually following patching. I’ve written several versions of the script below over the years; this is the most recent. You’ll need the Active Directory module installed on the system that runs the script.

The script scans your entire AD for member systems with a Windows Server operating system and presents them in a list to choose from. It then tests RPC (TCP 135) connectivity to each selected server and checks the automatic services on those that are reachable. Finally, it reports any automatic services that do not have a status of “running”, along with any servers that were not reachable.
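The reachability step can be slow when many servers are offline, because each failed connection has to time out. As a minimal sketch of the same TCP 135 check with an explicit timeout, the function below uses the .NET `TcpClient` class directly. `Test-TcpPort` and its parameters are hypothetical names for illustration and are not part of the script that follows.

```powershell
# Hypothetical helper (not part of the original script): returns $true when a
# TCP connection to the given host and port succeeds within the timeout.
function Test-TcpPort {
    param(
        [Parameter(Mandatory)][string]$ComputerName,
        [int]$Port = 135,          # RPC endpoint mapper, as used by the script
        [int]$TimeoutMs = 1000     # assumed default; tune for your network
    )
    $client = New-Object System.Net.Sockets.TcpClient
    try {
        $task = $client.ConnectAsync($ComputerName, $Port)
        # Wait() returns $true only if the connect attempt finished in time;
        # a refused connection throws and is handled by the catch block.
        if ($task.Wait($TimeoutMs)) { return $client.Connected }
        return $false
    }
    catch {
        return $false
    }
    finally {
        $client.Close()
    }
}

# Example use against a list of host names:
# $Servers | Where-Object { Test-TcpPort -ComputerName $_ -Port 135 }
```

The trade-off is that this only proves the port accepts connections; it does not return the extra detail that `Test-NetConnection` provides.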

<#

.SYNOPSIS

Checks Active Directory member servers for automatic services that are not currently running.

.DESCRIPTION

Dynamically generates a list of Active Directory servers.

Uses WMI to examine the status of all services set to automatically start on selected servers.

Filters common automatic services that do not stay started by default: mapsbroker, cdpsvc, gupdate, remoteregistry, sppsvc, wbiosrvc,

iphlpsvc, tiledatamodelsvc, clr_optimization, and Microsoft Edge Update are currently excluded from the report.

.INPUTS

Get-ServerAutoSerivcesStatus displays a gridview of selectable Active Directory member servers.

Shift+ and CTRL+ select are enabled.

CTRL+A to select all.

Criteria to filter.

.OUTPUTS

System.String or gridview. Get-ServerAutoSerivcesStatus returns a string stating that all automatic services on the selected servers are running,

or a gridview of the servers and services that are not.

Get-ServerAutoSerivcesStatus also displays a string listing servers that did not respond on TCP 135 (RPC).

.EXAMPLE

PS> Get-ServerAutoSerivcesStatus.ps1

#>

$ErrorActionPreference = "SilentlyContinue"

$Servers = Get-ADComputer -Filter 'Operatingsystem -Like "*server*"' -Properties dnshostname|

Select-Object -ExpandProperty dnshostname|

Out-GridView -Title "Select Servers To Enumerate AutoServices. CTRL+A to Select All" -PassThru

$Report = @()

$ErrorLog = @()

$ServersOnline = @()

Write-Host -ForegroundColor Yellow "Please wait, testing connectivity to selected servers....."

Foreach ($Server in $Servers) {

If ((Test-NetConnection -WarningAction SilentlyContinue -ComputerName $Server -Port 135).tcptestsucceeded){$Serversonline += $Server}

Else {$Errorlog += $Server}

}

ForEach ($Server in $ServersOnline) {

$Wmi = Get-WMIObject win32_service -ComputerName $Server -Filter 'State != "Running" AND StartMode = "Auto"'|

Select-Object @{n="ServerName"; e={$server}}, @{n="ServiceName";e={$_.name}},@{n="Status";e={$_.state}},@{n="Start Account";e={$_.startname}}

# Write-Host emits nothing to the pipeline, so add the results directly
$Report += $Wmi

}

# Drop services that commonly stop on their own, and keep the filtered result
$Report = $Report | Where-Object {($_.ServiceName -notlike "mapsbroker") -and ($_.ServiceName -notlike "cdpsvc") -and ($_.ServiceName -notlike "gupdate") -and

($_.ServiceName -notlike "remoteregistry") -and ($_.ServiceName -notlike "sppsvc") -and ($_.ServiceName -notlike "wbiosrvc") -and ($_.ServiceName -notlike "iphlpsvc") -and

# Microsoft Edge Update is matched by its service name (edgeupdate, edgeupdatem)
($_.ServiceName -notlike "tiledatamodelsvc") -and ($_.ServiceName -notlike "*clr_optimization*") -and ($_.ServiceName -notlike "edgeupdate*")} |

Select-Object @{n="Server";e={$_.ServerName}}, @{n="Stopped Service";e={$_.ServiceName}}, Status, "Start Account"

If ($Report) {$Report | Out-GridView -Title "These automatic services are not running"}

Else {Write-Host -ForegroundColor Green "All Automatic Services on $($Serversonline.count) reachable servers are started."}

If ($ErrorLog -ne $null) {Write-Host -ForegroundColor Red "These $($ErrorLog.count) servers were not reachable via RPC (port 135)`n `n" ($ErrorLog -join ",`n")}

Else {Write-Host "No connection issues to selected servers detected."}

Pause

Exit
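
The long chain of `-notlike` tests is the part of the script most likely to need editing as new noisy services turn up. One possible alternative, sketched below, keeps the exclusions in an array of wildcard patterns and checks each service name against it. `Test-ServiceExcluded`, `$ExcludedServicePatterns`, and the sample objects are hypothetical names and data for illustration; the `edgeupdate*` pattern assumes the Microsoft Edge Update services are named `edgeupdate` and `edgeupdatem`.

```powershell
# Wildcard patterns for services that commonly stop on their own.
# The list mirrors the exclusions named in the script's description.
$ExcludedServicePatterns = @(
    'mapsbroker', 'cdpsvc', 'gupdate', 'remoteregistry', 'sppsvc',
    'wbiosrvc', 'iphlpsvc', 'tiledatamodelsvc', '*clr_optimization*',
    'edgeupdate*'   # assumption: service names for Microsoft Edge Update
)

# Hypothetical helper: $true when the service name matches any pattern.
function Test-ServiceExcluded {
    param(
        [string]$ServiceName,
        [string[]]$Patterns
    )
    foreach ($pattern in $Patterns) {
        # -like is case-insensitive, the same as in the script's filter
        if ($ServiceName -like $pattern) { return $true }
    }
    return $false
}

# Made-up sample rows shaped like the script's $Report entries.
$sample = @(
    [pscustomobject]@{ ServerName = 'srv01'; ServiceName = 'sppsvc';  Status = 'Stopped' }
    [pscustomobject]@{ ServerName = 'srv01'; ServiceName = 'Spooler'; Status = 'Stopped' }
    [pscustomobject]@{ ServerName = 'srv02'; ServiceName = 'clr_optimization_v4.0.30319_64'; Status = 'Stopped' }
)

# Keep only the rows whose service is not on the exclusion list.
$filtered = @($sample | Where-Object {
    -not (Test-ServiceExcluded -ServiceName $_.ServiceName -Patterns $ExcludedServicePatterns)
})
$filtered | Format-Table -AutoSize
```

With this layout, adding or removing an exclusion is a one-line change to the array rather than an edit to a nested condition.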