After you have more than a handful of servers on your network, it can be challenging to catch small issues before they take something down and get noticed by users. What you need is a good monitoring tool: one that captures resource consumption, error events, and installed software, shows patching status, and generally helps you keep an eye on your systems.

If your place is like a lot of the businesses I’ve worked for, you probably have a bunch of emailed reports from various systems that amount to spam. You try to watch the emails and texts, but they vomit so much useless data all over your inbox that it’s hard to make sense of it. Important notifications end up lost in the noise.

Purchasing a multi-thousand-dollar monitoring tool is not always possible. The more nodes you have, the more expensive a monitoring tool will be. Math says that you won’t need a monitoring tool until you have a lot of stuff to watch, which almost guarantees that a commercial solution isn’t going to be cheap. Spending money on something that doesn’t directly generate profitable returns can be a challenge for any company, but outages hurt customer and worker confidence in your systems. This situation can leave technologists feeling trapped.

As system admins, engineers, or architects, we know that the operating system has and reports most, if not all, of the information that we require. We can connect the MMC Computer Management snap-in or the Server Administrator tool to almost any computer and see its event logs, service status, and more. The problem is that the data isn’t correlated and filtered in a way that gives us the up/down, big-picture view we need to proactively correct small problems before they become big ones.

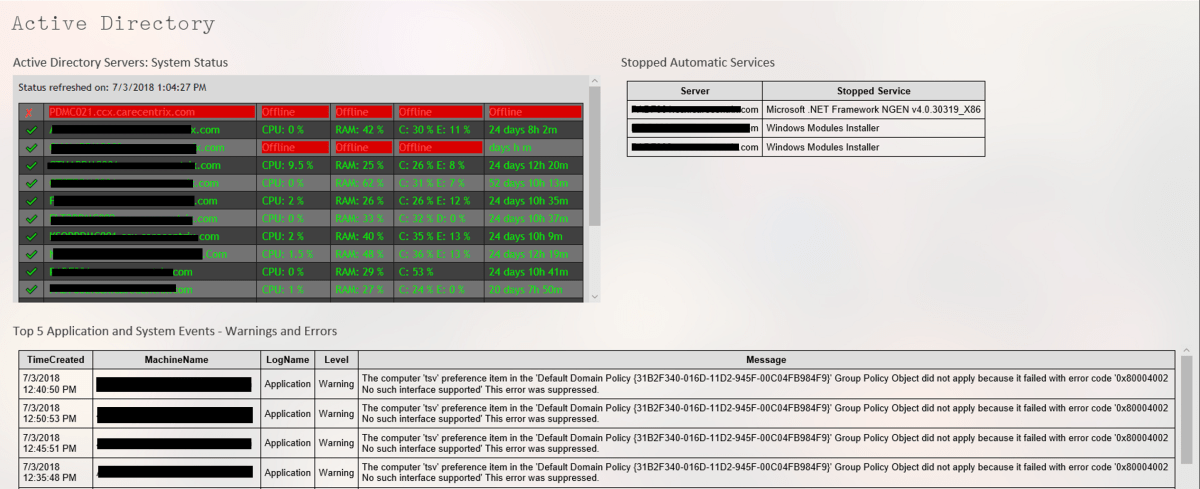

In this series we’ll examine how PowerShell can help gather all of the data we need from every Windows system attached to our network. We’ll use it to generate HTML reports that, in the end, will be uploaded to a web hosting engine so that we can see our systems’ status at a glance. The solution isn’t going to compete with System Center or SolarWinds, but it’s better than 10,000 unread items in your inbox and it is entirely free (assuming that you have Windows CALs).

I have to break this down into component parts, or the post would be a book. Stick with me and I think that you’ll end up with a functioning monitoring tool. Feel free to take all the credit for yourself. Maybe your boss will give you a raise. Just read my blog once in a while without your ad-blocker turned on and we’ll be even.

First up are the Windows event logs. Microsoft, in all of its genius, has been spitting out the classic Application, Security, Setup, and System event logs for as long as I can remember. The amount of data they collect is impressive. Typically, they are my first stop on any troubleshooting endeavor. We all know the drill: open the Computer Management MMC, right-click on the system name to connect to a remote PC, and then filter for errors and warnings. You can configure them to dump to a database for correlation and indexing, but most of us never get that far. We end up logging on to a problem system and manually checking the logs for relevant messages one at a time. Stop doing that, you’ll go blind!

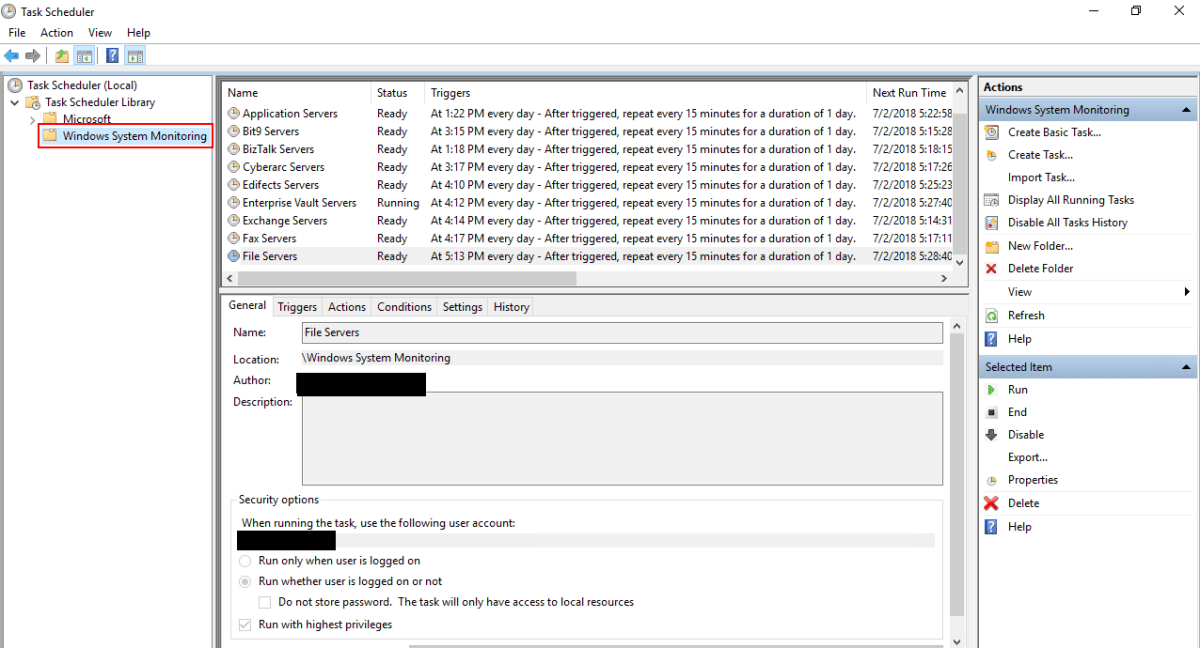

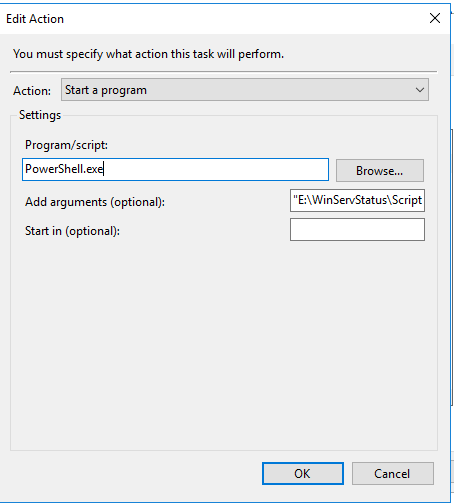

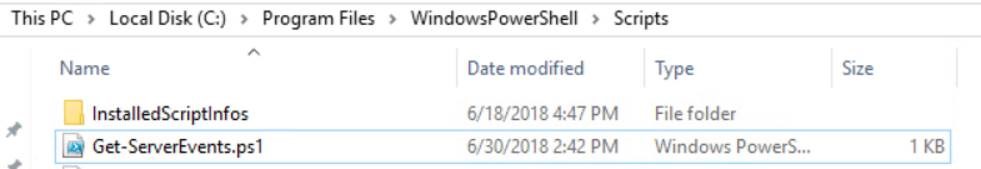

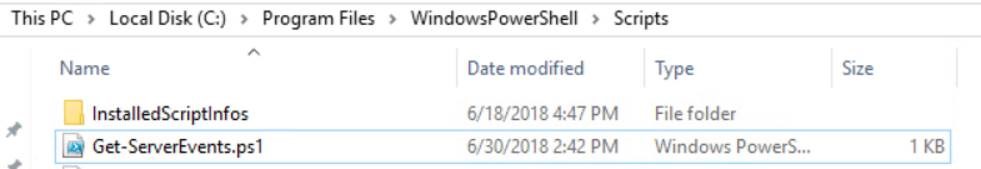

PowerShell will get us the same information, a nice filtered-for-errors view of the logs, but it can do it in bulk. The code below is written to be a module that you can call from a scheduled task or from another script. If you’re going to follow the whole series to build my tool, you will want to run it as a module. Copy the code and save it as a Get-ServerEvents.ps1 file in the C:\Program Files\WindowsPowerShell\Scripts folder. If the Scripts folder isn’t there, make it.

param (

[string] $list,

[string] $outputpath

)

$style = "

BODY{font-family: Arial; font-size: 10pt;}"

$style = $style + "TABLE{border: 1px solid black; border-collapse: collapse;}"

$style = $style + "TH{border: 1px solid black; background: #dddddd; padding: 5px; }"

$style = $style + "TD{border: 1px solid black; padding: 5px; }"

$style = $style + ""

Function Get-Events {

$servers = Get-Content $list

Foreach ($server in $servers) {

Get-WinEvent -ComputerName $server -MaxEvents 5 -FilterHashtable @{Logname ="Application"; Level=2,3;StartTime=(get-date).AddHours(-1)},

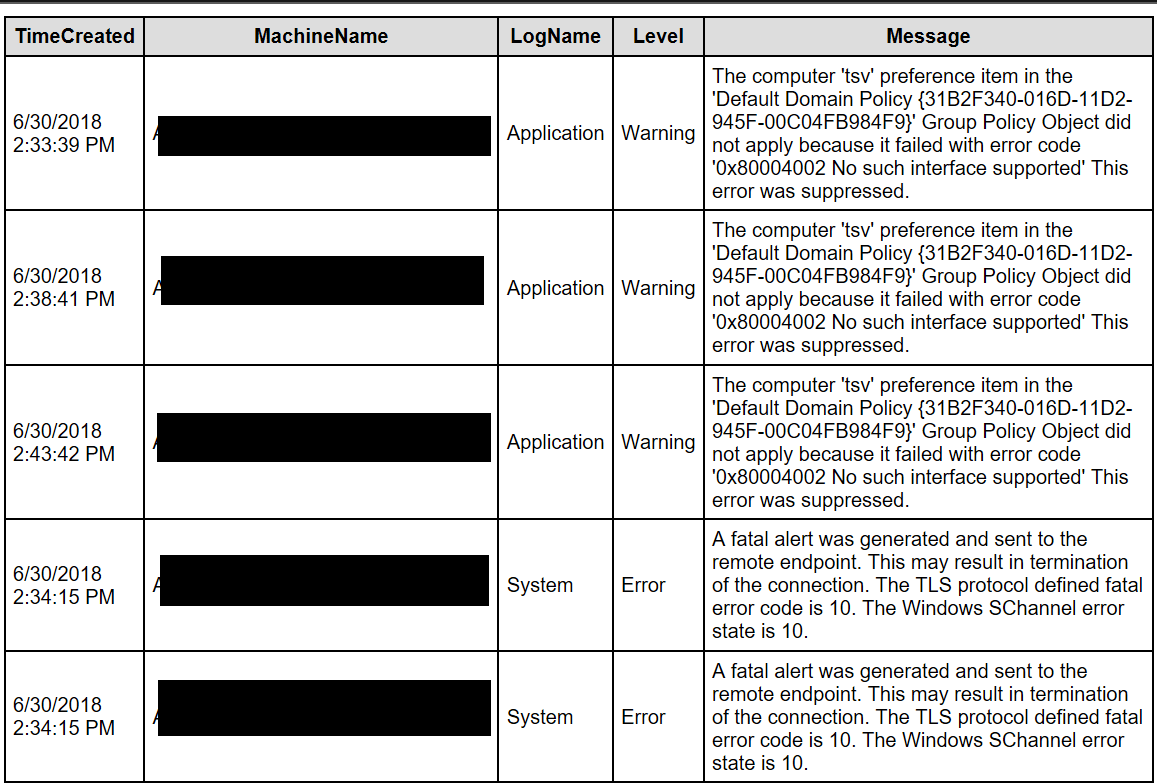

@{Logname ="System"; Level=2,3;StartTime=(get-date).AddHours(-1)} -ErrorAction SilentlyContinue|Select TimeCreated, MachineName, Logname,@{n="Level";e={$_.LevelDisplayName}},Message

}

}

$report = Get-Events|Sort-Object Machinename, Logname |ConvertTo-Html -head $style|Out-String

$report|out-file -FilePath $outputpath

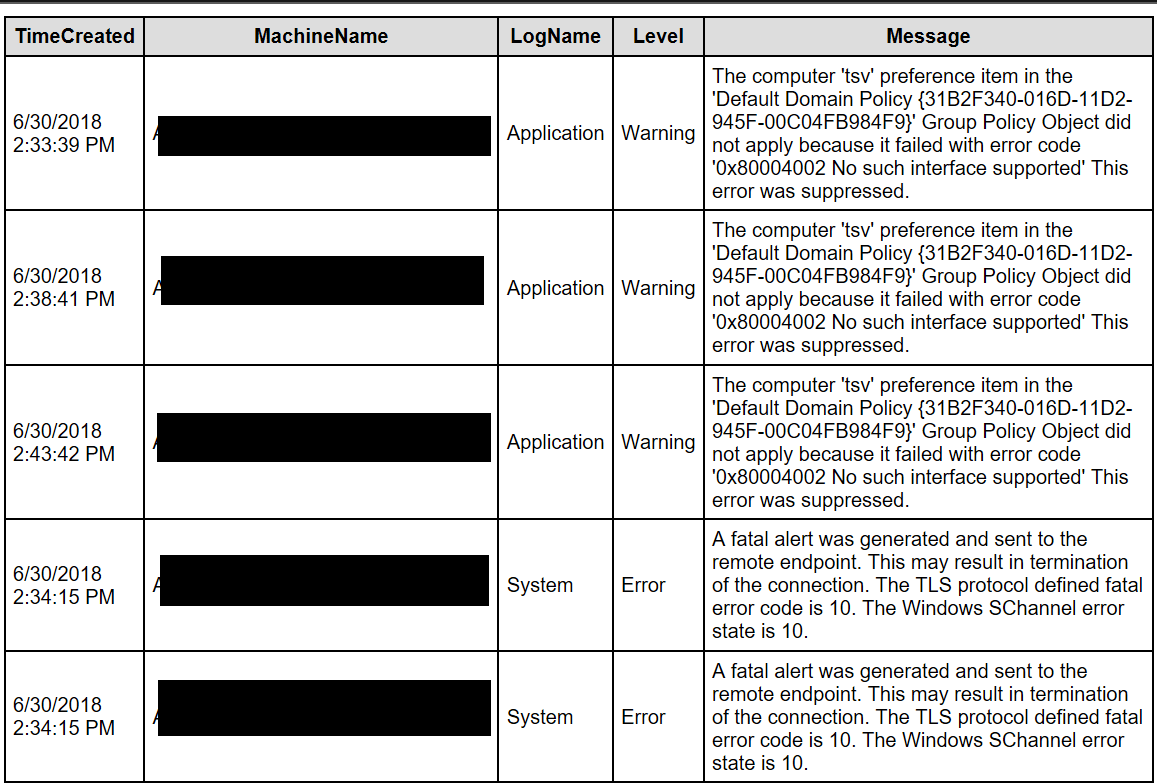

The script gathers up to 5 of the most recent Application and System event log errors and warnings from the last hour for each server in the list, and outputs them into a basic HTML table.
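If you want to see what the report pipeline does before pointing it at real servers, here is a minimal sketch that runs the same Select, Sort, and ConvertTo-Html steps against made-up records. The sample objects, server names, and messages are hypothetical stand-ins for what Get-WinEvent returns; only the pipeline shape comes from the script.

```powershell
# Hypothetical sample records standing in for Get-WinEvent output.
$sampleEvents = @(
    [pscustomobject]@{
        TimeCreated      = Get-Date '2020-01-01 10:15'
        MachineName      = 'SRV02'
        LogName          = 'System'
        LevelDisplayName = 'Warning'
        Message          = 'Disk is nearly full.'
    }
    [pscustomobject]@{
        TimeCreated      = Get-Date '2020-01-01 10:05'
        MachineName      = 'SRV01'
        LogName          = 'Application'
        LevelDisplayName = 'Error'
        Message          = 'Service failed to start.'
    }
)

# A trimmed-down style block; the CSS must sit inside <style> tags to take effect.
$style = "<style>TABLE{border-collapse: collapse;} TH,TD{border: 1px solid black; padding: 5px;}</style>"

# Same shaping as the script: rename LevelDisplayName to Level, then sort.
$rows = $sampleEvents |
    Select-Object TimeCreated, MachineName, LogName, @{n='Level'; e={$_.LevelDisplayName}}, Message |
    Sort-Object MachineName, LogName

# Render the rows as an HTML page and show the markup in the console.
$html = $rows | ConvertTo-Html -Head $style | Out-String
$html
```

Pipe `$html` to Out-File and open the result in a browser to check the table styling before you schedule anything.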

To run the script, create a *.txt or *.csv file containing the hostnames of the systems you want to see events for, one per line, and then call or run it with the -list and -outputpath parameters. Put quotes around any path that contains spaces. For example: Get-ServerEvents.ps1 -list "c:\users\myname\my documents\ad_serverts.txt" -outputpath \\reports_server\myreports\ad_serverts.html
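Since the script is meant to be called from a scheduled task, here is a rough sketch of registering one with the ScheduledTasks cmdlets that ship with recent Windows versions. The function name, task name, and default script path are my assumptions, not part of the original tool, and on older Windows versions you may also need to pass -RepetitionDuration to the trigger.

```powershell
# Sketch only: the function name, task name, and default path are assumptions.
function Register-ServerEventsTask {
    param (
        [string] $ScriptPath = 'C:\Program Files\WindowsPowerShell\Scripts\Get-ServerEvents.ps1',
        [string] $List,
        [string] $OutputPath,
        [string] $TaskName = 'Get-ServerEvents hourly'
    )

    # Quote each path so spaces survive the trip through powershell.exe.
    $arguments = "-NoProfile -File `"$ScriptPath`" -list `"$List`" -outputpath `"$OutputPath`""

    $action = New-ScheduledTaskAction -Execute 'powershell.exe' -Argument $arguments

    # Start now and repeat every hour, matching the script's one-hour look-back.
    $trigger = New-ScheduledTaskTrigger -Once -At (Get-Date) -RepetitionInterval (New-TimeSpan -Hours 1)

    Register-ScheduledTask -TaskName $TaskName -Action $action -Trigger $trigger
}
```

Call it from an elevated prompt with something like `Register-ServerEventsTask -List 'C:\Reports\servers.txt' -OutputPath '\\reports_server\myreports\servers.html'` (both paths are placeholders). The task runs under the account that registers it unless you say otherwise, so that account needs rights to read the remote event logs and write to the report share.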

If you don’t want to mess with text files, you could also use the OU structure in AD as the source of your computer names. My organizational units didn’t line up with the way I needed my reports to look, hence the text file parameter. If you don’t have that problem, try changing the $servers variable.

$servers = Get-ADComputer -Filter * -SearchBase "OU=MyOU,DC=mydomain,DC=com" | Select-Object -ExpandProperty DNSHostName
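If you want both options in one script, a small helper can pick the source for you. This is a sketch under a few assumptions: the Get-ServerNames function and its -searchbase parameter are hypothetical names of mine, and the AD branch needs the ActiveDirectory module (RSAT) installed on the machine running the report.

```powershell
# Hypothetical helper: returns hostnames from a text file or from an AD OU.
function Get-ServerNames {
    param (
        [string] $list,        # path to a text file with one hostname per line
        [string] $searchbase   # assumed parameter: distinguished name of an OU
    )

    if ($list) {
        # Skip blank lines so Get-WinEvent is never handed an empty name.
        Get-Content $list | Where-Object { $_.Trim() }
    }
    elseif ($searchbase) {
        # Requires the ActiveDirectory module.
        Get-ADComputer -Filter * -SearchBase $searchbase |
            Select-Object -ExpandProperty DNSHostName
    }
    else {
        throw 'Provide either -list or -searchbase.'
    }
}
```

Inside Get-Events you would then swap the Get-Content line for something like `$servers = Get-ServerNames -list $list`.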


When you run the report, you’ll end up with a nice, neat HTML file like the one below. It’s easy to attach to an email notification; just add a Send-MailMessage command to the bottom of the script. If you follow the rest of this series, we’ll end up publishing the report(s) to a website.
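For the email route, something along these lines could go at the bottom of the script. It is only a sketch: the Send-EventReport function name is mine, and the addresses and SMTP server in the usage line are placeholders for your own.

```powershell
# Hypothetical wrapper around Send-MailMessage for the finished report.
function Send-EventReport {
    param (
        [string] $ReportPath,   # the HTML file the script wrote
        [string] $To,
        [string] $From,
        [string] $SmtpServer
    )

    # Splat the parameters so the call stays readable.
    $mail = @{
        To          = $To
        From        = $From
        Subject     = "Server event report $(Get-Date -Format 'yyyy-MM-dd HH:mm')"
        Body        = Get-Content -Path $ReportPath -Raw   # show the table inline
        BodyAsHtml  = $true
        Attachments = $ReportPath                          # and attach the file too
        SmtpServer  = $SmtpServer
    }

    Send-MailMessage @mail
}
```

Usage would look like `Send-EventReport -ReportPath $outputpath -To 'admins@example.com' -From 'reports@example.com' -SmtpServer 'smtp.example.com'`.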